AI Leaps Forward: Robot Butlers & Nvidia's Super Brain Chip

Nvidia's Blackwell GPU unleashes massive power for trillion-parameter models. Figure AI's Figure 01 robot shows human-like interaction with OpenAI. Google DeepMind's SIMA learns general skills in virtual worlds. Explore these interconnected breakthroughs.

The AI Revolution Accelerates: Blackwell, Humanoid Robots, and Virtual World Training

We're witnessing breakthroughs that are not just incremental improvements but paradigm shifts – from robots capable of complex household chores to a silicon chip so powerful it borders on the unbelievable, and AI learning to navigate complex virtual worlds much like we do. This isn't science fiction anymore; it's the rapidly unfolding reality of 2024.

In a recent episode of the Venture Step Podcast, I touched upon several groundbreaking developments that are sending shockwaves through the tech world and beyond. My own Google Home device even chimed in, seemingly eager to join the AI discussion, a humorous reminder of how pervasive these technologies are becoming. Today, I want to expand on those conversations, diving deeper into Nvidia's awe-inspiring Blackwell architecture, the impressive human-robot interaction demonstrated by Figure AI's Figure 01 robot in collaboration with OpenAI, and Google DeepMind's innovative SIMA agent, which is learning to be a generalist AI by mastering video games.

These aren't just isolated advancements but interconnected pieces of a larger puzzle, each contributing to the acceleration in AI capabilities. Let's explore what these breakthroughs mean for business, technology, and the future of human endeavor.

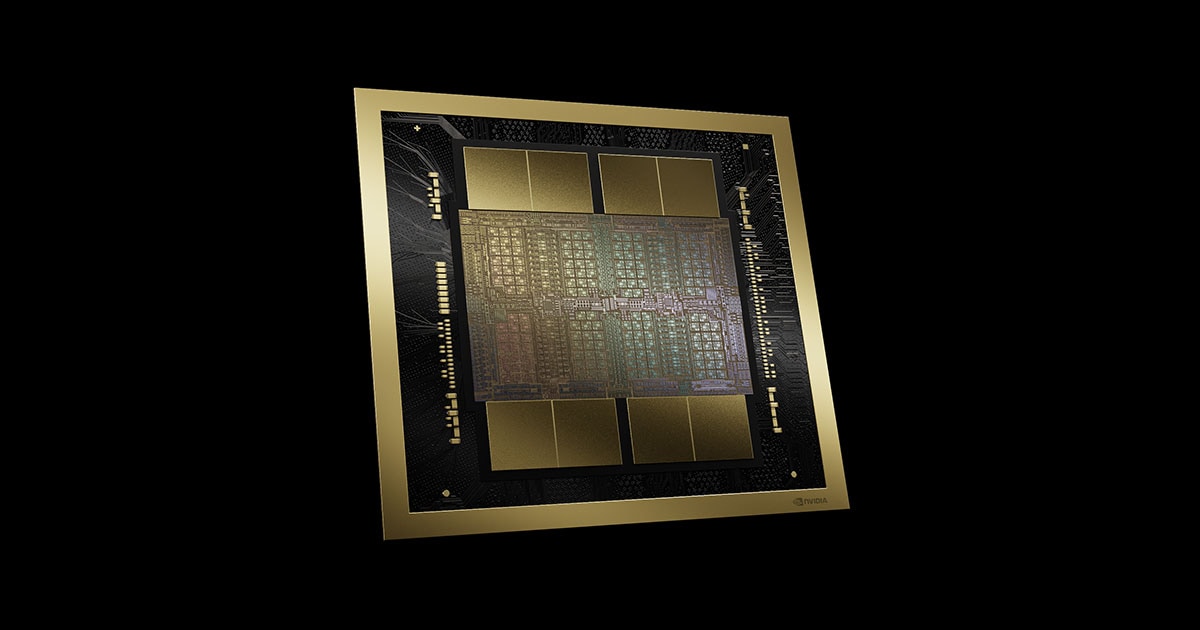

Nvidia's Blackwell: Unleashing Unprecedented AI Power

Nvidia recently held what can only be described as their "AI Day," and the show's star was undoubtedly the Blackwell B200 GPU. Calling it just a "chip" almost does it a disservice; it's a new architecture, a powerhouse designed to tackle the most demanding AI workloads imaginable.

A Quantum Leap in Computational Capacity

So, why is Blackwell such a monumental deal? Let's put its capabilities into perspective:

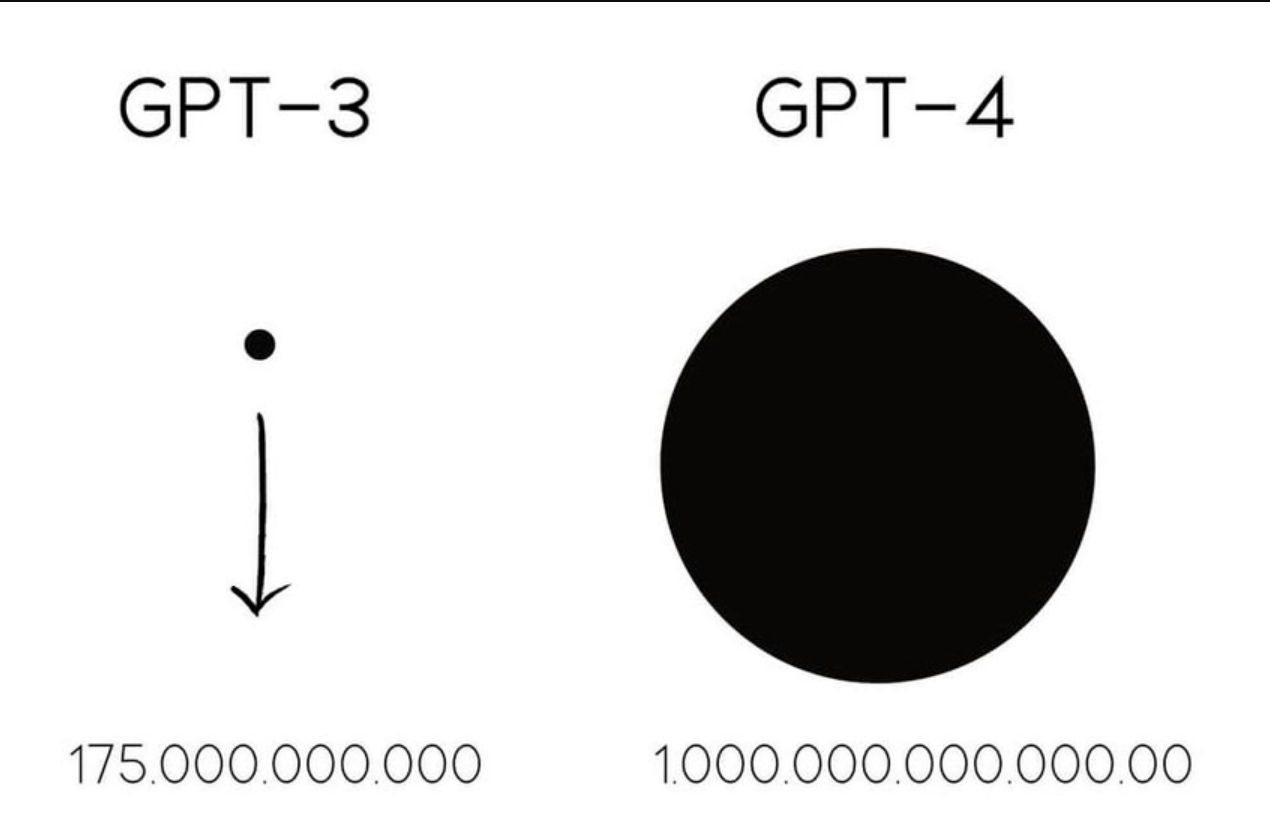

- Trillion-Parameter Models: Nvidia claims the Blackwell architecture can train AI models with up to one trillion parameters. To understand the scale of this, consider that GPT-3, a model that already transformed our interaction with AI, has 175 billion parameters. While unconfirmed, industry theories suggest that GPT-4 might consist of multiple models, perhaps eight, each with around 250 billion parameters, working in concert. Blackwell's capacity to handle a single trillion-parameter model is a staggering leap forward. As I mentioned on the podcast, more parameters don't automatically equate to a better model, as evidenced by many efficient open-source models operating in the 60-70 billion parameter range. However, the sheer scale Blackwell enables opens doors to complexity and nuance previously out of reach.

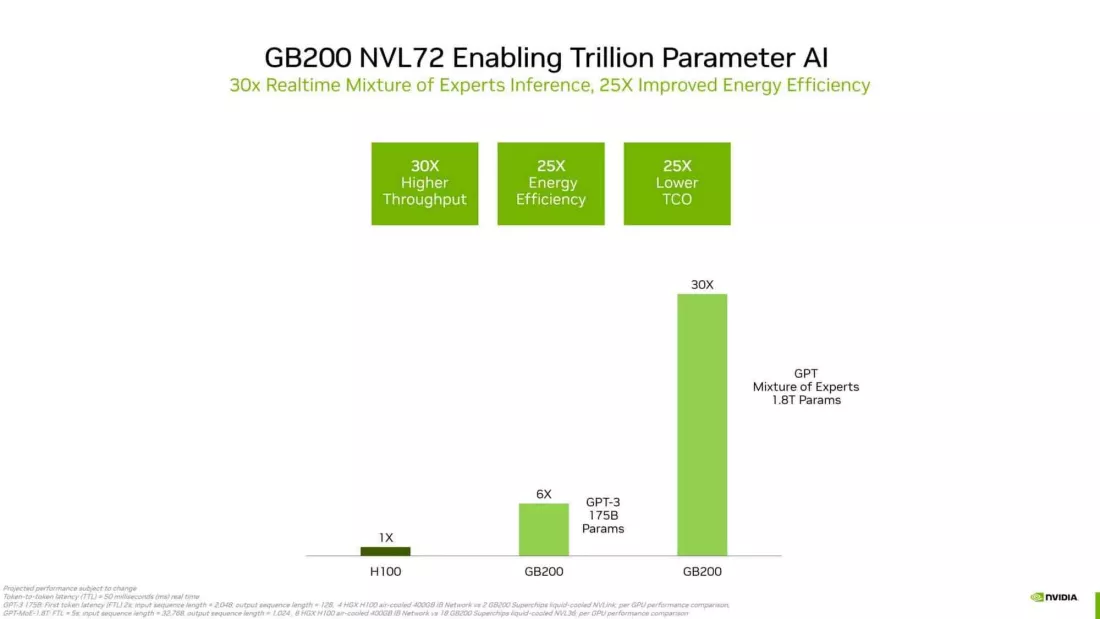

- Speed and Efficiency: Blackwell promises up to 30 times the performance of its predecessor, the already formidable H100, on specific AI inference workloads. Crucially, Nvidia also claims up to 25 times lower cost and energy consumption on those same workloads.
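To put the parameter counts above in perspective, here is a rough back-of-the-envelope sketch. The parameter counts come from the text; the bytes-per-parameter values are my own assumptions about numeric precision, and the estimate covers only the stored weights, not optimizer state or activations.

```python
# Back-of-the-envelope scale comparison.
# Parameter counts are taken from the article; the precision choices
# (2 bytes or 1 byte per parameter) are illustrative assumptions.
GPT3_PARAMS = 175e9
TRILLION_PARAMS = 1e12


def weight_memory_tb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in terabytes (1 TB = 1e12 bytes)."""
    return num_params * bytes_per_param / 1e12


# How many times more parameters a trillion-parameter model has than GPT-3.
scale_ratio = TRILLION_PARAMS / GPT3_PARAMS

print(f"A 1T-parameter model has {scale_ratio:.1f}x the parameters of GPT-3")
for label, nbytes in [("16-bit", 2), ("8-bit", 1)]:
    tb = weight_memory_tb(TRILLION_PARAMS, nbytes)
    print(f"{label} weights alone: {tb:.1f} TB")
```

Even under these simplified assumptions, the weights alone run to terabytes, which is why a model of this size has to be spread across many GPUs.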

The Critical Role of Energy Efficiency

Training and running large-scale AI models are incredibly energy-intensive. The H100 systems, for instance, could draw enormous amounts of power, with some configurations heading towards multiple gigawatts. To clarify, a single gigawatt is one billion watts – enough to power millions of lightbulbs or, as a whimsical internet search might tell you, a fictional DeLorean's flux capacitor.

The operational costs associated with this power draw are substantial, forming a significant barrier to entry and scalability for many AI initiatives. Similar concerns about cryptocurrencies' energy footprint have been voiced, with comparisons made to the power consumption of entire industrialized nations. By making Blackwell significantly more energy-efficient, Nvidia is reducing the operational expenditure for companies leveraging AI and addressing a critical sustainability concern. This efficiency will be paramount as AI models become larger and more ubiquitous.
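For a feel of the numbers, here is a small sketch of the arithmetic. Everything in it is hypothetical: the bulb wattages, the 10-megawatt cluster, and the electricity price are placeholder assumptions I picked for illustration, not figures from Nvidia or any data center operator.

```python
# Illustrative power arithmetic. All inputs below are hypothetical
# placeholders, not measured or vendor-published figures.
WATTS_PER_GIGAWATT = 1_000_000_000
HOURS_PER_YEAR = 24 * 365


def bulbs_powered(gigawatts: float, bulb_watts: float) -> float:
    """Number of bulbs of a given wattage that the power level could run."""
    return gigawatts * WATTS_PER_GIGAWATT / bulb_watts


def annual_energy_cost_usd(power_megawatts: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost for a constant draw, running around the clock."""
    kilowatts = power_megawatts * 1000
    return kilowatts * HOURS_PER_YEAR * usd_per_kwh


# One gigawatt expressed in bulbs (assumed 10 W LED and 60 W incandescent).
print(f"10 W bulbs per gigawatt: {bulbs_powered(1, 10):,.0f}")
print(f"60 W bulbs per gigawatt: {bulbs_powered(1, 60):,.0f}")

# Assumed 10 MW cluster at an assumed $0.10 per kWh.
print(f"Yearly cost: ${annual_energy_cost_usd(10, 0.10):,.0f}")
```

Because the cost scales linearly with power draw, any efficiency gain at the chip level flows straight through to the electricity bill.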

Transformative Applications Across Industries

The implications of such a powerful and efficient architecture are vast:

- Scientific Simulation: Fields like drug discovery, climate modeling, and materials science will benefit immensely from the ability to run far more complex and accurate simulations.

- Large Language Models (LLMs) and Generative AI: The next generation of LLMs will be trained on Blackwell, leading to even more sophisticated, context-aware, and creative AI assistants and tools.

- Autonomous Vehicles: As I discussed in a previous episode concerning Nvidia's supply chain, companies like Tesla have historically relied on Nvidia GPUs for their autonomous driving systems. Tesla even began developing its own chips due to supply constraints. Blackwell will undoubtedly power the next leaps in self-driving capabilities, processing vast amounts of sensor data in real-time.

- The Path to Artificial General Intelligence (AGI): While AGI is still a subject of much debate and research, Blackwell's raw power and efficiency will undoubtedly accelerate research in this domain.

Nvidia's Blackwell isn't just an upgrade; it's a foundational shift underpinning the next wave of AI innovation, potentially leading to breakthroughs we can only imagine.

Figure 01 & OpenAI: The Dawn of Truly Interactive Humanoid Robots

Imagine a robot that can understand your spoken requests, interact with its environment with human-like dexterity, and even explain its reasoning. This isn't a scene from a futuristic movie; it was a live demonstration featuring the Figure 01 robot, a product of Figure AI, working in conjunction with OpenAI.

A Glimpse into the Future of Human-Robot Collaboration

In the demo I reviewed on the podcast, the Figure 01 robot showcased an impressive array of capabilities:

- Natural Language Interaction: A human asked the robot if it could have something to eat. The robot identified an apple as the only edible item on the table and handed it over.

- Contextual Understanding and Task Execution: When the human placed some trash on the table and asked the robot to clean up while explaining its earlier choice, it handled both tasks.

- Dexterous Manipulation: The robot was then asked to put away dishes. It carefully picked up a plastic cup and plates, slotting them delicately into a drying rack, demonstrating an understanding of object properties and careful manipulation.

- Self-Assessment: When asked how it performed, the robot responded, "I think I did well. The apple found a new home, the trash is gone, and the tableware is in its place."

The robot's fluidity and seeming common-sense reasoning are what made this demonstration so compelling. While demos must always be viewed with a degree of healthy skepticism regarding their real-world robustness, the capabilities shown represent a significant step forward from pre-programmed automaton behavior. The collaboration with OpenAI suggests that powerful language and reasoning models are being integrated directly into the robot's operational loop, allowing for this dynamic interaction.

From Sci-Fi Trope to Practical Assistant

The potential applications are immense:

- Household Assistance: As my Nana humorously mentioned after seeing the video, the dream of a "Rosie the Robot" (from "The Jetsons") doing household chores is edging closer to reality. Such robots could be transformative for an aging population or individuals needing assistance.

- Healthcare and Eldercare: Robots could assist with patient care, provide companionship, and help with daily tasks.

- Manufacturing and Logistics: Going beyond current industrial robots that perform repetitive tasks, robots like Figure 01 could handle more complex, less structured assignments in factories and warehouses.

The development of robots like Figure 01, especially when powered by the kind of AI that architectures like Nvidia's Blackwell can train, signals a future where humans and robots collaborate in increasingly sophisticated ways.

Google DeepMind's SIMA: AI Learning to "Live" in Virtual Worlds

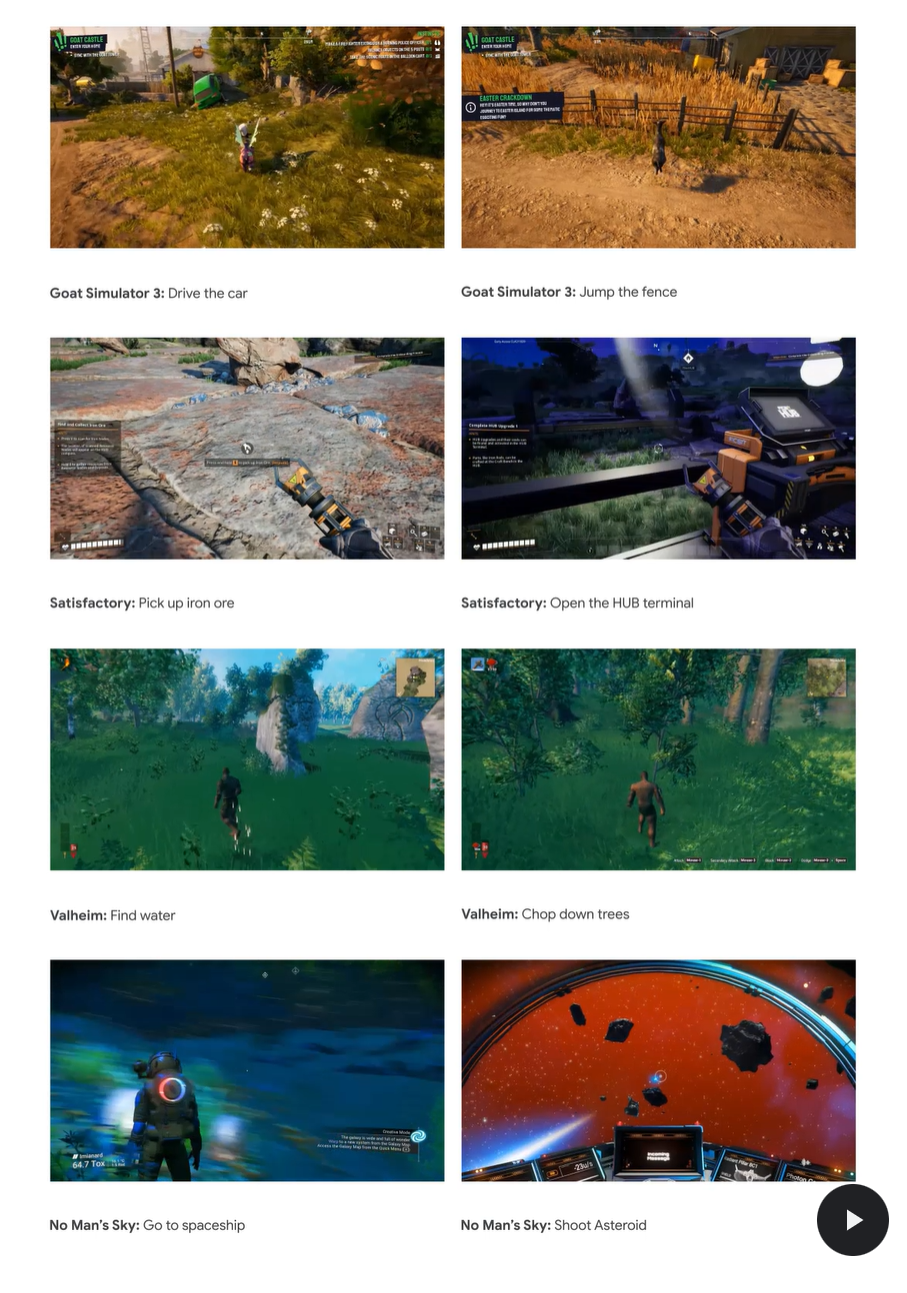

While Figure 01 learns in the physical world, Google DeepMind is taking a fascinating approach to developing more general AI capabilities by training its SIMA (Scalable Instructable Multiworld Agent) in complex virtual environments.

A Generalist Agent, Not a Specialist

The key idea behind SIMA is to create an AI agent that isn't trained for one specific task or game but can learn to follow instructions and operate across many 3D virtual environments. As I explained on the podcast, this is a departure from AI models that are highly optimized for a single purpose.

- Learning from Human Play: DeepMind researchers are training SIMA by having humans play various video games, including titles like No Man's Sky and Valheim. The AI observes human actions and learns to correlate them with natural language instructions.

- Natural Language Instructions: Users can give SIMA text-based commands like "drive this car," "chop down this wood," or "craft a pickaxe." The agent then attempts to carry out these instructions within the game world.

- No Explicit Reward for Every Action: A crucial aspect is that SIMA isn't always driven by a direct reward signal for every correct action. Instead, it's learning to "play the game" or "live" in these environments, understanding the cause and effect of its actions in a broader sense. It learns to progress and interact purposefully, guided by instructions and by observing the underlying mechanics of the worlds it inhabits.
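The "learning from human play" idea above is a form of imitation learning, and a toy version can be sketched in a few lines. To be clear, this is not DeepMind's implementation: the demonstration records, observation labels, and action names are all made up, and a simple lookup table stands in for the learned model a real agent would need.

```python
from collections import Counter, defaultdict

# Hypothetical demonstration records: (instruction, observation, human_action).
# A real agent would see screen images, not hand-written labels like these.
DEMONSTRATIONS = [
    ("chop down this wood", "tree_ahead", "swing_axe"),
    ("chop down this wood", "tree_ahead", "swing_axe"),
    ("chop down this wood", "tree_ahead", "move_forward"),
    ("chop down this wood", "tree_far", "move_forward"),
    ("drive this car", "car_ahead", "enter_vehicle"),
    ("drive this car", "in_car", "accelerate"),
]


def fit_policy(demos):
    """Map each (instruction, observation) pair to the most common human action."""
    counts = defaultdict(Counter)
    for instruction, observation, action in demos:
        counts[(instruction, observation)][action] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}


def act(policy, instruction, observation, default="no_op"):
    """Pick the imitated action, falling back to a default for unseen situations."""
    return policy.get((instruction, observation), default)


policy = fit_policy(DEMONSTRATIONS)
print(act(policy, "chop down this wood", "tree_ahead"))
print(act(policy, "drive this car", "in_car"))
print(act(policy, "craft a pickaxe", "at_workbench"))
```

Notice that no reward appears anywhere: the policy simply copies what humans did under the same instruction. The lookup table cannot handle situations it has never seen, which is exactly the gap a generalist agent is meant to close.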

Impressive Generalization and Future Potential

DeepMind's research indicates that SIMA is already showing promising results:

- Cross-Game Generalization: The agent can perform tasks in games it has never encountered before, demonstrating an ability to generalize its learned skills.

- Performance Metrics: While still in development, SIMA is achieving notable "relative performance" benchmarks compared to human players, both in scenarios with some prior exposure to an environment and in completely novel ones.

The potential applications of such a generalist AI are:

- Hyper-Flexible AI Assistants: AI assistants that can understand and execute a much wider range of complex, multi-step tasks based on natural language, whether in software or potentially controlling physical systems.

- Revolutionizing Game Development: This technology could lead to video games with incredibly dynamic non-player characters (NPCs) that react realistically and unpredictably, creating truly emergent narratives and endless replayability.

- Safer Training for Real-World AI: Virtual environments provide a secure and scalable way to train AI agents before deploying them in the real world, where mistakes can have serious consequences.

SIMA represents a significant step towards AI that can understand and interact with complex, dynamic environments in a more human-like way, learning through observation and instruction rather than just rigid programming.

The AI Tightrope: Navigating Progress with Responsibility

These advancements (Nvidia's Blackwell enabling vastly more powerful models, Figure 01 showcasing sophisticated human-robot interaction, and Google's SIMA learning to generalize across virtual worlds) are undeniably exciting. They paint a picture of a future where AI augments human capabilities in unprecedented ways.

The rapid acceleration of AI capabilities also brings to the forefront critical ethical considerations and potential societal disruptions:

- The Specter of Misuse: Technologies like deepfakes, powered by increasingly sophisticated AI, pose risks to information integrity. We're already seeing AI-generated "influencers" online who, while not real people, garner significant followings and sponsorships, blurring the lines between authenticity and artifice.

- Job Displacement and Economic Shifts: As AI becomes more capable of performing complex cognitive tasks, questions about job displacement in various sectors, from law to creative industries, become more pressing.

- The "Consciousness" Conundrum: As AI models grow in complexity, exhibiting behaviors that mimic understanding and reasoning, philosophical questions about consciousness, sentience, and even AI "rights" inevitably arise. While we are not there yet, the development trajectory prompts us to consider these long-term implications. If an AI can perform multifaceted tasks requiring cognitive thought on par with, or exceeding, human capabilities, how do we define its place?

- Ensuring Beneficial Outcomes: The overarching challenge is to steer this powerful technology towards outcomes that genuinely improve human well-being and address global challenges, rather than exacerbating existing inequalities or creating new risks.

My Nana's excitement for a helpful robot housekeeper is a wonderful sentiment, reflecting a long-held human dream. Yet, the dystopian visions of "Black Mirror" also serve as cautionary tales. The key lies in proactive, thoughtful governance, robust ethical frameworks, and a collective commitment to responsible innovation.

The Converging Future of Intelligence

While distinct, the breakthroughs from Nvidia, Figure AI with OpenAI, and Google DeepMind are deeply interconnected. Hardware advancements like Blackwell provide the computational engine required for sophisticated AI models. These models, in turn, can power more intelligent robots like Figure 01 and versatile agents like SIMA. Some of these research paths enable the AI of the future to operate in the physical world, while others focus on general intelligence in virtual realms. Together, they contribute to a richer, more comprehensive understanding of intelligence itself.

The pace is quickening, and the potential for transformation across nearly every industry is immense. From how we conduct scientific research and design products to how we manage our daily lives and play, AI is set to redefine the human experience.

What AI news has blown your mind lately? The field is moving so fast that keeping up is challenging, but the discussions are vital.

Thank you for joining me in this deeper dive. AI's journey is one of the most fascinating and impactful narratives of our time, and on the Venture Step Podcast, we'll continue to track its progress and explore its implications.

Engage with The Venture Step Podcast:

I'm always keen to hear your thoughts. What aspects of these AI breakthroughs excite or concern you the most? Do you have a deeper analysis of the Blackwell chip, the Figure 01 robot, or Google's SIMA project?

- Share your insights in the comments on the LinkedIn or X post where you found this article.

- I'm particularly active on my YouTube channel, Dalton B Anderson, under the "VentureStep" podcast playlist.

Next week on the podcast, I'll be shifting gears to discuss a classic book, "The 48 Laws of Power," offering my take on its relevance and whether it's a worthy read for today's entrepreneurs and professionals.

Listen

Catch the full podcast episode and explore our archives on your favorite platform:

- Apple Podcasts

- Spotify

- YouTube Music

- And wherever else you get your podcasts!

- Or visit our website: https://venturestep.net/

Watch

Prefer a visual experience? Watch the podcast episodes, including the one that inspired this article, on:

- YouTube (Channel: Dalton B Anderson, Playlist: VentureStep)

- Spotify (Video Podcasts)

Thank you for your time and for being part of the Venture Step community. Whether it's morning, noon, or night, I hope you have a great day, and I'll talk to you next week.

Support

Nvidia

Figure AI

Google DeepMind